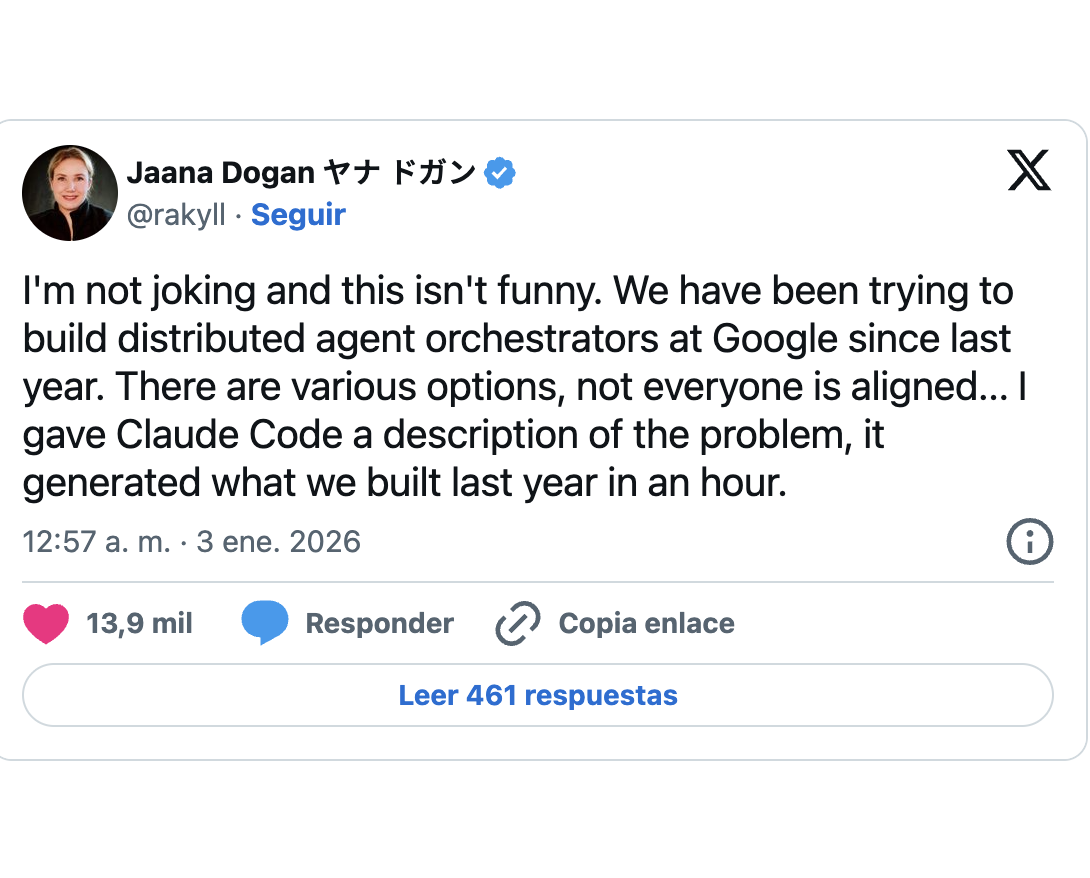

You arrive at your company's weekly meeting and one of your best engineers blurts out: "That problem we've been working on for a year... I solved it yesterday in an hour with an AI." It sounds like a fable, but it happened this January 2026 at Google. One of its principal engineers, Jaana Dogan, posted a tweet saying she had given Claude Code (an AI-based programming assistant from Anthropic) the description of a complex problem, and the tool generated in an hour practically the same thing her team had taken a year to build! It was no joke. In her own words, it "generated what we built last year in an hour". How is this possible, and what does it mean for companies?

Jaana Dogan: Principal Engineer at Google

Jaana Dogan is responsible for the Gemini API (Google's AI) and is one of the best in her field. That a principal engineer at Google turns to a competitor's AI to solve a problem says a lot, even though Anthropic, the company behind Claude, counts Google and Amazon among its major investors. In her tweet, Dogan explained that her team had spent a year trying to develop an agent orchestration system (a component that coordinates multiple AI agents) without reaching internal consensus, which underscores the bureaucracy of a large company.

Jaana decided to take a simplified version of the problem (without confidential data) and give it to Claude Code to see what would happen. Within an hour, the AI generated code comparable to what the Google team had taken a year to build. Dogan acknowledges that the result is not perfect and that she is iterating on it, meaning it still needed human refinement, but it nonetheless demonstrated how advanced these tools have become.

20% human – 60% machine – 20% human

Three lessons stand out right away:

- AI kills bureaucracy: In large companies (and, let's be honest, in many medium-sized Iberian companies too), projects sometimes stall in meetings, approvals and debates. An AI, free of internal politics, gets straight to the point.

- It is not about replacing people, but about augmenting their skills: Dogan was not replaced by Claude; she worked hand in hand with it. The AI did the heavy lifting, but it was Jaana who knew what to ask, how to guide the solution and then how to validate the result.

- The value of human skills increases: Paradoxically, the more powerful AI becomes, the more important the people who know how to take advantage of it. So soft skills and expertise will make the difference.

This story also validates something we have discussed before on this blog: the 20-60-20 model of human-AI collaboration. In any creative or productive process, the first 20% is essentially human work (defining the idea and the direction, asking the AI the right question), the middle 60% is where AI shines (executing repetitive tasks quickly), and the last 20% becomes human again (review, fine-tuning, final approval). Isn't that exactly what Jaana Dogan did? She provided the idea and context (the initial 20%), Claude Code did most of the development (the central 60%), and now she and her team will iterate on and polish the result (the final 20%). Collaboration in its purest form.

In the short term: opportunity and caution

This anecdote sends a clear message: AI tools are already mature enough to solve real problems in record time. For a company that may not have an R&D department, this is a great opportunity, because cloud-based AI assistants can cut weeks of work in data analysis, report generation or software prototyping. Your most restless employees are probably already tinkering with ChatGPT, Claude or other AIs to streamline their daily tasks, even if management doesn't know about it. This phenomenon, known as "Shadow AI", is similar to Shadow IT: it occurs when people adopt the best tools available to be more productive on their own, sometimes bypassing official policies.

Should you be concerned about this as a manager or director? Yes and no. On the one hand, if your team starts using AI on their own, it's because they need to solve problems faster or more creatively than the official route allows. Ignoring or banning it could stifle internal innovation. On the other hand, caution is needed: using external tools means sending data to third-party providers, often outside the company and sometimes outside the EU. In Europe we have strict regulations (think GDPR), and each company must protect its intellectual property. In Google's case, Dogan was careful not to expose confidential details in her test, and even so, she is only allowed to use Claude on open-source projects, not on internal products. Companies must establish clear policies: what kind of data can be passed to a public AI? What approvals are required?

In the medium term: changing the way we work and compete

In the medium term, the implications become more strategic. If this is the situation in 2026, what will 2028 look like? Medium-sized companies that embrace human-machine collaboration will have a competitive advantage. What changes can we anticipate?

- Official integration of AI into workflows: What is experimental today will be standard in a couple of years. Leading companies already report that a large share of their new code is written by AI (Anthropic claims 90%, Google 50% and Microsoft 30%).

- New competencies and roles: Just as the Community Manager role emerged with the explosion of social media, the age of AI will bring new profiles. Training and retraining will be key in the medium term. Here the 20-60-20 approach comes into play again: training must emphasize the initial and final 20%, where humans make the difference (creativity, judgment, supervision), while technology takes on more and more of the middle 60%.

- A culture of collaboration and change: This requires clear leadership that communicates that AI is an ally. When people understand that collaborating with the machine frees them from mechanical work and lets them shine creatively, resistance turns into enthusiasm.

In the long term: the transformation of the business model

Looking further ahead, say 5 to 10 years from now, companies could operate in ways that are difficult to imagine today, but toward which this trend clearly points:

- Smaller, more productive organizations: If an AI can do in one hour what previously took a team a year, it is worth asking how many other things could be automated or accelerated. People will focus on designing strategies, customer relations and innovation, while the "armies" of execution could be largely digital.

- A new competitive balance: In the long term, we will probably see human-machine ecosystems competing with each other: the company that achieves the best internal synergy between its human experts and its powerful AI systems will set the pace.

- Ethics, trust and brand: Companies that use AI must do so transparently and accountably. In Europe especially, the regulatory framework (such as the AI Act, whose obligations are being phased in) will require us to be able to explain decisions made with AI and to guarantee certain values.